Human Motion Capture Benchmark

Welcome to the AIMove Benchmark!

We proudly present our benchmark for human movement analysis, designed for researchers and professionals who need accurate and reliable data from real industrial operators and craftsmen. The benchmark comprises seven datasets, each containing inertial-based motion capture data that can be used for a wide range of applications, including dexterity analysis, simulation, and virtual animation. An additional dataset, denoted ERGD, is provided for the ergonomic analysis of human movements.

On the website, you will find details about each of the seven datasets, instructions on how to access and use the data, and current results achieved in gesture recognition and human motion generation.

Expert craftsmen recorded in their actual workshops.

Recordings of industrial workers in production lines.

Ergonomic analysis

Inertial-based MoCap system

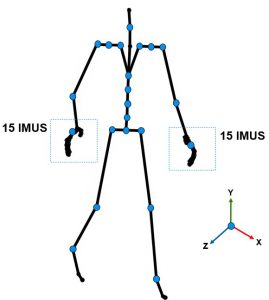

The BioMed bundle motion capture system from Nansense Inc. (Baranger Studios, Los Angeles, CA, USA) was used to capture the gestures of industrial operators and craftsmen.

The system comprises a full-body suit with 52 IMUs strategically positioned across the torso, limbs, and hands. At 90 frames per second, the sensors measure the orientation and acceleration of body segments on the articulated spine chain, shoulders, arms, legs, and fingertips. After each recording, the rotations about the X, Y, and Z axes were calculated automatically by Nansense Studio’s inverse kinematics solver and stored in the Biovision Hierarchy (BVH) format.
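As a rough illustration of what a BVH recording looks like on disk, the sketch below reads the MOTION section of a BVH file and recovers the frame count, the frame time, and the per-frame channel values. It is a minimal example rather than the benchmark's own tooling: the function name `read_bvh_motion` and the tiny two-frame sample skeleton are hypothetical, and the joint names and channel order in the actual recordings may differ.

```python
import io

# Hypothetical two-frame BVH sample (not taken from the benchmark).
# A frame time of 0.011111 s corresponds to roughly 90 frames per second.
SAMPLE_BVH = """HIERARCHY
ROOT Hips
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  End Site
  {
    OFFSET 0.0 10.0 0.0
  }
}
MOTION
Frames: 2
Frame Time: 0.011111
0.0 90.0 0.0 0.0 0.0 0.0
0.0 90.0 0.0 1.5 0.0 0.0
"""


def read_bvh_motion(stream):
    """Return (frame_count, frame_time, frames) from a BVH text stream."""
    lines = iter(stream)
    # Skip the HIERARCHY section; motion data starts after the MOTION keyword.
    for line in lines:
        if line.strip() == "MOTION":
            break
    else:
        raise ValueError("no MOTION section found")

    # The two header lines have the form "Frames: N" and "Frame Time: T".
    frame_count = int(next(lines).split(":")[1])
    frame_time = float(next(lines).split(":")[1])

    # Each remaining non-empty line holds one frame of channel values.
    frames = [[float(v) for v in line.split()] for line in lines if line.strip()]
    if len(frames) != frame_count:
        raise ValueError("frame count does not match the MOTION header")
    return frame_count, frame_time, frames


if __name__ == "__main__":
    count, dt, frames = read_bvh_motion(io.StringIO(SAMPLE_BVH))
    print(f"{count} frames at about {round(1 / dt)} fps, {len(frames[0])} channels per frame")
```

For a real recording, the same function can be given an open file handle, for example `read_bvh_motion(open(path))`, where `path` points to a downloaded BVH file. Mapping each column back to a joint and rotation axis requires parsing the CHANNELS lines of the HIERARCHY section, which this sketch deliberately skips.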

Citation

Don’t forget to give us a shoutout when using our datasets; we love to be cited! You can access our papers here: Olivas-Padilla2023, Olivas-Padilla2023, and Olivas-Padilla2021.

@ARTICLE{Olivas-Padilla2023,
author={Olivas-Padilla, Brenda Elizabeth and Glushkova, Alina and Manitsaris, Sotiris},
journal={IEEE Access},
title={Motion Capture Benchmark of Real Industrial Tasks and Traditional Crafts for Human Movement Analysis},
year={2023},
volume={11},
number={},
pages={40075-40092},
doi={10.1109/ACCESS.2023.3269581}}

- For our results in human movement analysis using the Gesture Operation Model (GOM), please cite:

@ARTICLE{Olivas-Padilla2021,
author = {Olivas-Padilla, Brenda Elizabeth and Manitsaris, Sotiris and Menychtas, Dimitrios and Glushkova, Alina},
title = {Stochastic-Biomechanic Modeling and Recognition of Human Movement Primitives, in Industry, Using Wearables},
journal = {Sensors},
volume = {21},
year = {2021},
number = {7},
article-number = {2497},
PubMedID = {33916681},
ISSN = {1424-8220},
doi = {10.3390/s21072497}}

@ARTICLE{Olivas-Padilla2023,
author = {Olivas-Padilla, Brenda Elizabeth and Glushkova, Alina and Manitsaris, Sotiris},
title = {Deep state-space modeling for explainable representation, analysis, and generation of professional human pose},
year = {2023},
archivePrefix = {arXiv},
arxivId = {2304.14502},
url = {https://arxiv.org/abs/2304.14502}}

- The code is accessible through our GitHub repository.

Competitions

Unleashing Human Motion Generation with Professional Movement Datasets

We are thrilled to announce the launch of an exciting new competition on Kaggle. The objective is to build models capable of generating realistic and coherent motion sequences, emulating the intricate dynamics of the human body.

For detailed information, please visit our dedicated competition page on Kaggle.

The Multi-Class Recognition of Professional Gestures

New competition coming soon for Gesture Recognition! Stay tuned for further updates on the competition launch date, rules, and guidelines.

Acknowledgments

The research leading to these results has received funding from the CARNOT Projet Fédérateur “Usine responsable” and from the Horizon 2020 Research and Innovation Programme under Grant Agreements No. 820767 (CoLLaboratE project), No. 822336 (Mingei project), and No. 101094349 (Craeft project).

We would like to thank Jean-Pierre Mateus, the European Center De Recherches Et Formation Aux Arts Verriers, the Piraeus foundation, the Haus der Seidenkultur museum, and the Romaero and Arçelik factories for contributing to the creation of the datasets.